In May, experts from many fields gathered in Montenegro to discuss "Existential Threats and Other Disasters: How Should We Address Them." The term "existential risk" was popularised in a 2002 essay by the philosopher Nick Bostrom, who defined it as referring to risks such that "an adverse outcome would either annihilate Earth-originating intelligent life, or permanently and drastically curtail its potential".

To appreciate the distinction between existential risks and other disasters, consider climate change. In some scenarios, runaway global warming could render most of the Earth too hot for humans to continue to live there, but Antarctica and some of the northernmost regions of Europe, Asia, and North America would become inhabitable.

If these are indeed the worst-case scenarios, then climate change, disastrous as it could be, is not an existential risk.

To be sure, if the seriousness of a disaster is directly proportional to the number of people it kills, the difference between a disaster that kills nearly all of Earth's human population and one that brings about its extinction would not be so great.

For many philosophers concerned about existential risk, however, this view fails to consider the vast number of people who would come into existence if our species survived for a long time but would not if Homo sapiens became extinct.

The Montenegro conference, by referring in its title to "other disasters", was not limited to existential risks, but much of the discussion was about them.

As the final session of the conference was drawing to a close, some of those present felt that the issues we had been discussing were so serious, and yet so neglected, that we should seek to draw public attention, and the attention of governments, to the topic by issuing a statement.

The general tenor of such a statement was discussed, and I was a member of a small group nominated to draft it.

The statement notes that there are serious risks to the survival of humankind, most of them created by human beings, whether intentionally, like bioterrorism, or unintentionally, like climate change or the risk posed by the creation of an artificial superintelligence that is not aligned with our values.

These risks, the statement goes on to say, are not being treated by governments with anything like the seriousness or urgency that they deserve.

The statement supports its view with reference to two claims made by Toby Ord in his 2020 book, The Precipice. Ord estimated the probability of our species becoming extinct in the next 100 years at 16-17%, or one in six. He also estimated that the proportion of world GDP that humanity spends on interventions aimed at reducing this risk is less than 0.001%.

In an update that appeared in July, Ord says that because new evidence suggests that the most extreme climate-change scenarios are unlikely, the existential risk posed by climate change is less than he thought it was in 2020.

On the other hand, the war in Ukraine means that the risk of nuclear war causing our extinction is higher, while the risks from superintelligent AI and pandemics are, in his view, lower in some respects and higher in others.

Ord sees the focus on chatbots as taking AI in a less dangerous direction because chatbots are not agents. But he regards the increased competition in the race to create advanced artificial general intelligence as likely to lead to cutting corners on AI safety.

Overall, Ord has not changed his estimate, which he admits is very rough, that there is a one-in-six chance that our species will not survive the next 100 years. He welcomes the fact that there is now increased global interest in reducing the risks of extinction and offers, as examples, the inclusion of the topic in the 2021 report of the Secretary-General of the United Nations and its prominence on the agenda of the international group of former world leaders known as The Elders.

The Montenegro statement urges governments to work cooperatively to prevent existential disasters and calls especially on affluent countries' governments to invest "significant resources" in finding the best ways to reduce risks of human extinction. Although the statement gives no indication of what "significant" means in this context, Ord has elsewhere suggested a commitment of 1% of global GDP to reduce the risks of our species becoming extinct. That is a thousand times more than his 2020 estimate of how much governments were then spending on this task, but it would be hard to argue that it is too much.

©2024 Project Syndicate

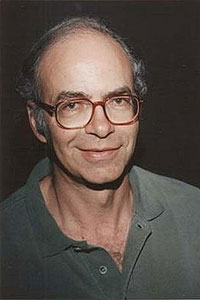

Peter Singer, founder of the organization The Life You Can Save, is Emeritus Professor of Bioethics at Princeton University and author of 'Animal Liberation', 'Practical Ethics', 'The Life You Can Save', and 'The Most Good You Can Do', and a co-author (with Shih Chao-Hwei) of 'The Buddhist and the Ethicist' (Shambhala Publications, 2023).